I am Nilesh Patel, an SEO professional providing SEO, SMO, SEM, SMM, PPC, web promotion, online marketing, website marketing and internet marketing services.

Thursday, December 31, 2015

Friday, December 18, 2015

3 Reasons why SEO is one of the Best Investments for your Business

Over the past few years many misconceptions have emerged concerning Search Engine Optimization. But which are some of the most misleading thoughts we have heard about SEO during the last year or so?

“SEO is dead,” the social media guru proclaimed.

“I don’t want SEO. I just want a good looking website similar to my competitor’s one.”

“Just build it and they will come.”

Such misconceptions still reside in the heads of online marketing experts, business owners and business executives as part of an overall digital mindset.

While I don’t feel sorry for the online marketing gurus who just parrot the latest buzzwords and tactics and magnify the above plus 100 more misconceptions, I do feel strongly about my mission: my duty to inform well-intentioned businesses that SEO still stands in 2015 at the forefront of investments for businesses that wish to increase their online presence.

SEO is an Investment

We can define SEO as an investment as it takes time and money to attract quality organic traffic through search engines. If money and time are properly invested then SEO will increase ROI due to the following three critical factors:

Accumulation

If you are building a new website from scratch, the initial organic traffic will be zero.

Even with websites that have been in existence for years, the organic traffic can remain quite low if no SEO strategy, or an improper one, has been implemented.

And even though this may sound very discouraging, in reality it is a very positive thing for small businesses that are willing to implement a long term SEO strategy.

The reason for this is the compound rate effect and how it relates to SEO. For the websites of small businesses it is not uncommon to experience a 30%, 50% or even 100% growth in organic traffic per year.

So, imagine that even if the website you are working on has only 100 unique visitors from organic (SEO) traffic per month, it is quite realistic to expect that in 3-5 years this same website can have 500, 700 or even over 1,000 visitors per month. This can be achieved through continuous “white-hat” SEO actions, because the compound rate effect helps websites accumulate increasingly higher levels of organic traffic over time with the same amount of SEO effort.
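To make the compound rate effect concrete, here is a minimal Python sketch that projects monthly organic visitors from the figures mentioned above (100 visitors per month, yearly growth of 30%, 50% or 100%). The function name and the assumption that growth compounds once per year at a constant rate are mine, purely for illustration; real traffic rarely grows this smoothly.

```python
def project_monthly_visitors(start, annual_growth, years):
    """Project monthly organic visitors after compounding a yearly growth rate.

    start: monthly visitors today
    annual_growth: yearly growth as a fraction (0.30 means 30%)
    years: number of years to compound over
    """
    return start * (1 + annual_growth) ** years


# Growth rates and time spans taken from the article's example.
for rate in (0.30, 0.50, 1.00):
    for years in (3, 5):
        visitors = project_monthly_visitors(100, rate, years)
        print(f"{rate:.0%} yearly growth for {years} years: "
              f"about {visitors:.0f} visitors per month")
```

Under these assumptions, 50% yearly growth sustained for five years already takes a site from 100 to roughly 760 monthly visitors, which is in line with the range quoted above.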

Multiplication

As users we tend to perform our research through search engines by using many different keyword combinations so as to fulfill our needs.

While there is no sure-fire way to know beforehand the exact queries (search terms) that each user will enter into a search engine, you can take advantage of the multiplier effect and rank your website for thousands of search terms while targeting only a much smaller subset of these phrases.

In order to achieve that you have to use search engine algorithms to your advantage. While this may sound illegal and black-hat, all it actually means is investing in a long-term “white-hat” SEO plan.

A website that is well optimized for a certain subset of keyword terms relevant to its niche will automatically go on to rank for hundreds of additional keyword terms.

Longevity

Anyone who has active paid campaigns, like a Google AdWords campaign, knows that the moment the campaigns are switched off, the paid traffic that flows to the website dries up as well.

In contrast, an investment in SEO produces long-term traffic that, once built, can be retained even with minimal effort in certain niches.

Even in competitive niches the most difficult part is to grow the SEO traffic for early stage websites or for websites that haven’t performed any SEO actions for years.

On the other hand, well-optimized websites with active SEO management can dominate the SERP rankings much more easily over the long haul with a steady level of SEO activity.

In conclusion, the accumulation factor, through the compound rate effect, will allow organic (SEO) traffic to pile up as time progresses. The multiplication factor will allow the keyword terms to multiply automatically, and as a result your website’s “keyword terms army” will grow and dominate the SERPs as time unfolds. Finally, the longevity factor will enable SEO traffic to pay dividends in the form of future, long-term organic traffic.

This trifecta of factors shows that SEO is rightfully considered one of the best investments for your business… and is still very much alive!

Saturday, August 08, 2015

Google Panda 4.2 Is Here; Slowly Rolling Out After Waiting Almost 10 Months

Google says a Panda refresh began this weekend but will take months to fully roll out.

Google tells Search Engine Land that it pushed out a Google Panda refresh this weekend.

Many of you may not have noticed because this rollout is happening incredibly slowly. In fact, Google says the update can take months to fully roll out. That means that although the Panda algorithm is still site-wide, some of your Web pages might not see a change immediately.

The last time we had an official Panda refresh was almost 10 months ago: Panda 4.1 happened on September 25, 2014. That was the 28th update, but I would call this the 29th or 30th update, since we saw small fluctuations in October 2014.

As far as I know, very few webmasters noticed a Google update this weekend. That is how it should be, since this Panda refresh is rolling out very slowly.

Google said this affected about 2%–3% of English language queries.

New Chance For Some, New Penalty For Others

The rollout means anyone who was penalized by Panda in the last update has a chance to emerge if they made the right changes. So if you were hit by Panda, you unfortunately won’t notice the full impact immediately but you should see changes in your organic rankings gradually over time.

This is not how many of the past Panda updates rolled out, where typically you’d see a significant increase or decline in your Google traffic more quickly.

Panda Update 30 AKA Panda 4.2, July 18, 2015 (2–3% of queries were affected; confirmed, announced)

For More Info about Google Panda 4.2

Wednesday, May 20, 2015

Penalised by Google? Here are 11 reasons why and how to recover

Search engines become smarter all the time. Google is constantly improving its algorithms in order to give users the highest quality content and the most accurate information.

Algorithms follow rules. Uncovering those rules – and taking advantage of them – has been the goal of anyone who wants their website to rank highly. But when Google finds out that website owners have been manipulating the rules, it takes action by issuing those websites with a penalty. The penalty usually means a drop in rankings, or even exclusion from Google’s index.

As a website owner you sometimes know which rule you’ve broken. But for many it’s hard to work out. Read our guide below to find out where you’ve gone wrong and what you can do about it.

Table of contents

- Buying or selling links that pass PageRank

- Excessive link exchange

- Large-scale article marketing or guest posting campaigns

- Using automated programs or services to create links

- Text advertisements that pass PageRank

- Advertorials or native advertising where payment is received for articles that include links

- Links with optimized anchor text in articles or press releases

- Low-quality directory or bookmark site links

- Keyword-rich, hidden or low-quality links embedded in widgets

- Widely distributed links in the footers or templates of various sites

- Forum comments with optimised links in the post or signature

What is a Google penalty?

Google has been tweaking and improving its ranking algorithms since the end of 2000. That’s when it released its toolbar extension and when PageRank was released in a usable form.

Since then, Google has continued to work on the quality of the search results it shows users. Over time, the search engine giant began to remove poor quality content in favour of high quality, relevant information, which it would move to the top of the SERPs. And this is when penalties started rolling in.

Next was the Penguin update, which was rolled out in 2012 and hit more than 1 in 10 search results. These algorithm changes have forced site owners to rethink their SEO and content strategies to comply with Google’s quality requirements.

How to tell if you’ve been penalised

To discover the reasons you might have been penalised, you can watch this video and skip to the next section, or just keep reading.

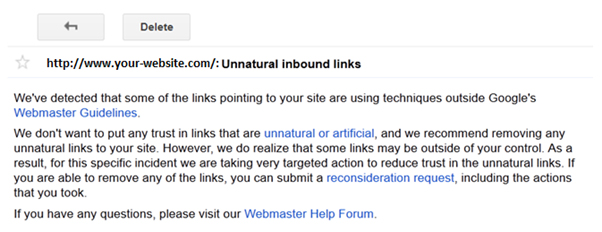

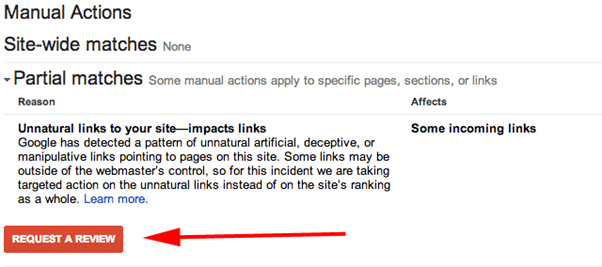

A penalty can be either automatic or manual. With manual penalties, you’ll probably be notified that you’ve been doing something wrong that needs to be fixed as soon as possible.

However, if the cause is a change of the algorithm, you may not always know you’ve been targeted.

Here are some clues you’ve been penalised by Google:

- Your site isn’t ranking well, not even for your brand name. That’s the most obvious clue, as your site should always rank well on that one keyword.

- You’ve been on page one of Google’s search results and are dropping to page two or three without having made any changes.

- Your site has been removed from Google’s cached search results overnight.

- You get no results when you run a site search (e.g. site:yourdomain.co.uk keyword).

- You notice a big drop in organic traffic in Google Analytics (or any other monitoring tool you’re using), especially a few days after a big Google update.
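The last clue, a sudden drop in organic traffic, can be checked with a few lines of Python once you have exported daily organic sessions from your analytics tool. This is only a rough sketch: the 7-day window, the 30% threshold and the sample figures are assumptions chosen for illustration, not values Google or any analytics vendor publishes.

```python
from statistics import mean


def find_traffic_drops(daily_sessions, window=7, threshold=0.3):
    """Return the indexes of days on which the average of the last `window`
    days is more than `threshold` lower than the average of the `window`
    days before that."""
    drops = []
    for day in range(2 * window, len(daily_sessions) + 1):
        before = mean(daily_sessions[day - 2 * window:day - window])
        after = mean(daily_sessions[day - window:day])
        if before > 0 and (before - after) / before > threshold:
            drops.append(day - 1)  # index of the last day in the window
    return drops


# Hypothetical export: two steady weeks, then a week at a lower level.
sessions = [500] * 14 + [300] * 7
print(find_traffic_drops(sessions))
```

Comparing weekly averages rather than single days keeps normal weekday/weekend swings from triggering a false alarm. If a flagged date falls shortly after a confirmed Google update, that is a hint worth investigating, not proof of a penalty.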

If you don’t have access to Webmaster Tools or Analytics, you can determine if you’ve been penalised by using one of the following tools:

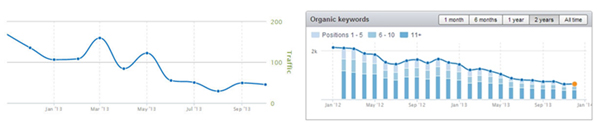

- SEMRush – Check if your search engine traffic is decreasing every day and also if the total number of keywords ranking top 20 is starting to decrease quickly:

If you notice one or more of the above factors, then you can be sure that Google has penalised your site and you need to do something about it quickly.

What caused the penalty?

When dealing with a penalty, the first thing you need to do is try to figure out what caused it – spammy links, over-optimising your content, etc. Only then can you follow the steps to try to fix it.

The most common issue is having bad, low quality backlinks pointing to your site. To find out what links Google considers to be “bad backlinks”, check out Google’s Webmaster Guidelines on Link Schemes.

1. Buying or selling links that pass PageRank

These backlinks are considered one of the worst types of links and they’re the main reason why many large websites have received a penalty. Although money doesn’t necessarily have to change hands, a paid backlink can also refer to offering goods or services in return for a link back and even sending free products without specifically asking the customers for a link back to your site.

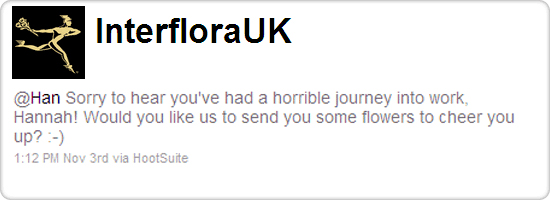

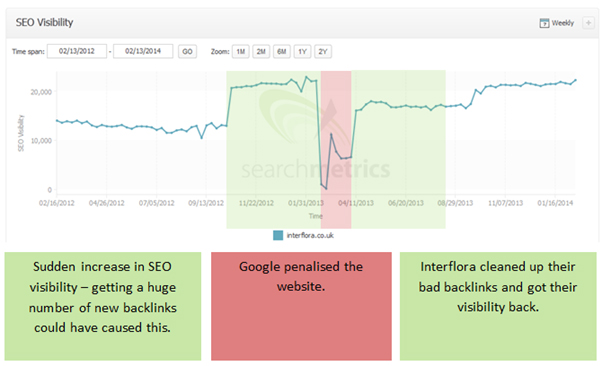

A good example of that is the Interflora incident.

The flower company sent out bouquets to make their customers feel better after a hard day’s work. Happy to receive the surprise, some of the customers wrote about the gesture on their own blogs/websites and linked back to the flower company. While you’d think this was just a very nice way of strengthening the relationship with their customers, Google tagged it as a marketing technique of buying links and penalised Interflora as a result.

2. Excessive link exchange

It’s common for company websites that are part of the same group to link to each other. This is why Google declared war only on link exchange done in excess. This is an old technique for getting backlinks, as a webmaster would more easily get a backlink from a site owner if they also returned the favour.

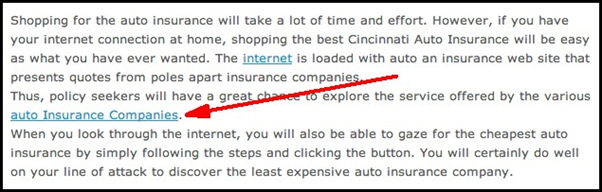

3. Large-scale article marketing or guest posting campaigns

There are numerous websites out there that accept article submissions. So webmasters took advantage and started getting backlinks by writing random articles and finding a way to add their own link. However, these articles were of very low quality, which is why Google decided to take action against those sites that were publishing articles just for the sake of the backlinks.

Guest blogging was recently added to the list of don’ts, mainly because it has quickly become a form of spam. Webmasters keep sending emails to all sorts of websites asking to submit their articles in exchange for a link. Sounds a lot like all the other spam email you never asked for but keep receiving, doesn’t it? And it works the other way around too. Webmasters that manage many blogs found guest blogging to be a good way to make money:

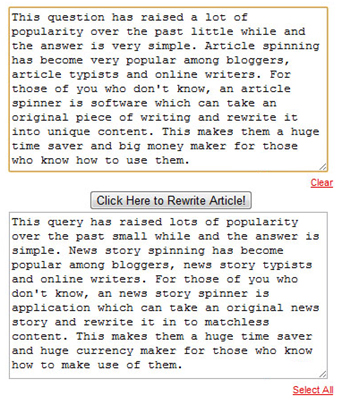

4. Using automated programs or services to create links

There are many tools used by black hat SEOs to create automated links, such as ScrapeBox and Traffic Booster, but the most common tool is the article spinner. To get content in which to place links, webmasters used the spinner to produce various pieces of content on the same subject without having to pay someone to write them.

With such poor quality content around a link, it’s easy for Google to realise this cannot in any way be considered a natural and earned backlink.

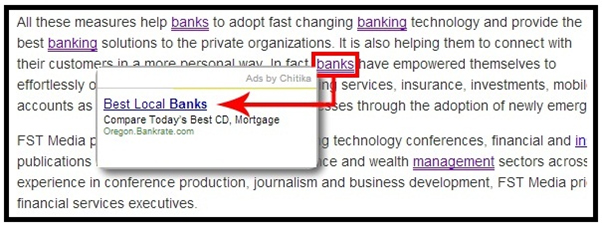

5. Text advertisements that pass PageRank

These types of links are created by matching a word to an advertisement. In the example below, the article is about banks in general but when you hover over the word “bank” you get an ad for a specific business. Since the presence of that business link is unnatural, this is one of the types of links that Google doesn’t like.

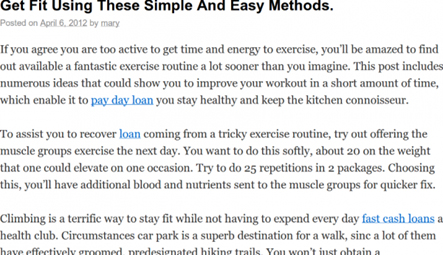

6. Advertorials or native advertising where payment is received for articles that include links

There are many websites out there filled with articles about various products and each article has at least one link pointing to an e-commerce website. The quality of the articles is rather low because the sole purpose is to just create enough content around a link.

7. Links with optimized anchor text in articles or press releases

Similar to the previous one, except that instead of contacting someone who has a website dedicated to building backlinks in exchange for money, these links are added to articles and press releases that get distributed over the internet through PR websites and free article submission websites.

There are many wedding rings on the market. If you want to have a wedding, you will have to pick the best ring. You will also need to buy flowers and a wedding dress.

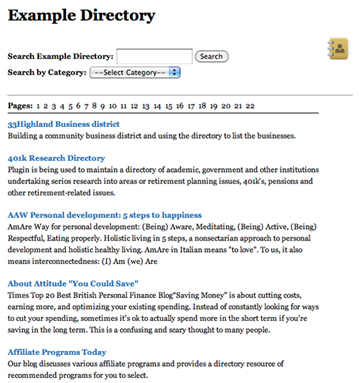

8. Low-quality directory or bookmark site links

Directories used to play an important role and represented an online phonebook for websites. It was easier to find a website by searching for it by category. As search engines advanced, the need for these structured directories decreased to the point where they are now being used for link building purposes only.

9. Keyword-rich, hidden or low-quality links embedded in widgets

The best example is Halifax, the first bank that Google has hit. They had these mortgage calculators embedded on various irrelevant websites just for the backlink.

10. Widely distributed links in the footers or templates of various sites

Sitewide backlinks are to be avoided at all costs, especially if the links are on websites that are in no way related to your linked website. If you place a link in the header, footer or sidebar of a website, that link will be visible on all its web pages, so don’t link to a website that you don’t consider to be relevant for your entire content.

The most common footer links are either a web developer credit or a “Powered by” link where you mention your CMS or hosting provider (see the example below). There are also free templates out there that have a link in the footer, and most people never take that link out.

Sidebar links are usually Partner links or blogrolls. Since the word “Partner” usually means that goods or services have been exchanged, this can be considered a paid link, so make sure to always use nofollow. As for other related blogs, if they are indeed related, there should be no issue, but if your list has links to ecommerce websites or you’re using affiliate links, you might want to nofollow those.

11. Forum comments with optimised links in the post or signature

Adding a signature link is an old link building technique and one of the easiest ways of getting a link. It has also been done through blog commenting. If you leave a comment on a forum or blog, make sure the answer is not only helpful and informative but also relevant to the discussion. If you add a link, it should point to a page that is also relevant to your answer and the discussion.

Collecting the necessary data

Now that you know how to identify those bad links, let’s see how you can go about finding each and every one of them:

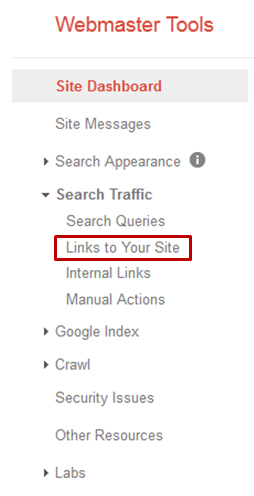

1. Google Webmaster Tools

This is where Google shows you details on the backlinks it has indexed. It should be your starting point in cleaning up your backlink profile, as it shows you how many backlinks each website is sending your way. This is how you can find most of your sitewide links – mark them down as such.

2. Ahrefs, Majestic SEO, Link Detox, Open Site Explorer – Your choice

Depending on how many backlinks you have, you will probably need to pay for a good backlink checker tool.

Of course there are also free tools such as BackLinkWatch and AnalyzeBacklinks that you can use if you don’t have millions of backlinks in your profile.

The idea is to gather links from as many sources as possible, as each tool has its own crawler and can discover different backlinks. So, to ensure you find as many as possible, it is advisable to use several tools.

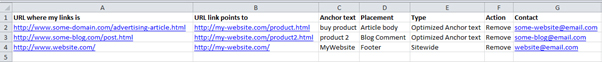

Next, get all the reports in one file, remove the duplicates and see how many links you’re left with. You can sort the remaining links by URL (coming from the same domain) to find the ones that are sitewide, by anchor text (to find the exact-match anchors or “money keywords”) or by any other type of data that those tools provide, such as discovery date, on-page placement and link type.
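If you are comfortable with a little scripting, the merge, de-duplicate and sort step can be sketched in Python. The column names `source_url` and `anchor` are hypothetical; every backlink tool names its export columns differently, so adjust them to match your files.

```python
import csv
import io
from urllib.parse import urlparse


def merge_backlink_reports(reports):
    """Merge CSV exports, drop duplicate source URLs, and sort the result
    by linking domain and then by anchor text."""
    seen = set()
    merged = []
    for report in reports:
        for row in csv.DictReader(report):
            url = row["source_url"].strip()
            if url in seen:
                continue  # the same link was reported by another tool
            seen.add(url)
            merged.append({
                "domain": urlparse(url).netloc.lower(),
                "source_url": url,
                "anchor": row["anchor"].strip(),
            })
    return sorted(merged, key=lambda link: (link["domain"], link["anchor"]))


# Two tiny made-up exports standing in for files from two different tools.
tool_a = io.StringIO(
    "source_url,anchor\n"
    "http://blog.example.com/post,cheap flowers\n"
    "http://dir.example.org/list,MyCompany\n"
)
tool_b = io.StringIO(
    "source_url,anchor\n"
    "http://blog.example.com/post,cheap flowers\n"
    "http://blog.example.com/about,MyCompany\n"
)
for link in merge_backlink_reports([tool_a, tool_b]):
    print(link["domain"], link["source_url"], link["anchor"], sep=" | ")
```

With real exports you would pass open file objects instead of the in-memory samples. Grouping by domain makes sitewide links stand out, since many rows then share the same domain.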

If you paid for links in the past or engaged in any of the link schemes Google frowns upon, try to find all the websites that still have your links by using a footprint. For instance, if you created website templates that you’ve distributed for free but have added a “Created by MyCompany” link in the footer, use the link’s anchor to find all the websites out there that have used your template.

If you’ve got a penalty but haven’t paid for links or engaged in any of the link schemes, check if your links might be considered spammy because of the websites they’re found on.

Not sure what spammy links are? Well, there’s no better classifier than your own eye, so if you want to check a potentially bad site your link is on, ask yourself:

- Does the site look like spam – low quality or duplicate content, no structure, no contact page, loads of outbound links?

- Do your link and anchor text look out of place on the site?

- Are there toxic links on the site – Gambling, Viagra etc.?

- Does the site look like it sells links – e.g. loads of anchor text rich sidebars and site-wide links?

If the answer is yes to any of the above questions, then your link should not be there.

When you go through the links, try to take notes and write down as much information as possible for each bad backlink.

Contacting webmasters

When you’ve checked every bad link and have all the information you need, start contacting every webmaster and ask them to remove or nofollow your links. Keep a copy of the email threads for each website, as you will need to show proof that you have tried to clean up the bad links.

If a contact email is not present on their site, look for a contact form. If that is not available, try a WHOIS lookup for that domain. If they’re using private registration then just mark it down as a no contact.

To make the process easier and save some time, here are a couple of automated email tools you could use:

- rmoov (Free to $99/month) – This tool helps you identify contacts, creates and sends emails, follows up with reminders and reports on results.

- Link Cleanup and Contact from SEOGadget (free) – Download the bad links from Google Webmaster Tools and upload them into the tool. SEOGadget then shows you the anchor text and site contact details (email, Twitter, LinkedIn).

- Remove’em ($249 per domain) – This solution combines suspicious link discovery with comprehensive email and tracking tools.

Be very persistent. If you’ve sent out emails and didn’t get a response, send follow-up emails after a couple of days. Even if you’re desperate to get the penalty lifted, keep in mind that those webmasters don’t owe you a thing and unless you’re polite and patient, you’re not going to get what you’re after.

Be organised. Create an online Google spreadsheet where you add your Excel list and also new columns to show:

- The date of the first email

- Response to first email

- Link status after first email

- The date of the second email

- Response to second email

- Link status after second email

- The date of the third email

- Response to third email

- Link status after third email

If you don’t get a reply from a webmaster after three emails, they’ll probably never reply so it might be time to give up.
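If you would rather generate the tracking sheet than type it by hand, the Python sketch below builds the header row and writes it as CSV, which you can then import into a Google spreadsheet. The “Bad link URL” column name and the `should_give_up` helper are my own illustrative additions; the other columns follow the list above.

```python
import csv
import io

ATTEMPTS = ("first", "second", "third")


def tracking_columns():
    """Build the header row: one URL column plus three columns per email."""
    columns = ["Bad link URL"]  # hypothetical name for the link column
    for attempt in ATTEMPTS:
        columns += [
            f"Date of {attempt} email",
            f"Response to {attempt} email",
            f"Link status after {attempt} email",
        ]
    return columns


def should_give_up(row):
    """True when all three emails were sent and none received a response."""
    all_sent = all(row.get(f"Date of {a} email") for a in ATTEMPTS)
    any_reply = any(row.get(f"Response to {a} email") for a in ATTEMPTS)
    return all_sent and not any_reply


# Write the header and one made-up row to an in-memory CSV.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=tracking_columns())
writer.writeheader()
writer.writerow({
    "Bad link URL": "http://spam.example.com/page",
    "Date of first email": "2015-05-01",
})
print(buffer.getvalue())
```

Rows for which `should_give_up` returns true are the natural candidates for the disavow file described further down.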

Add a column for the email threads. Copy/paste the entire email discussion between you and a webmaster into an online Google doc and use its link in your main worksheet. Make sure to set the Sharing options to “Anyone with the link” so the Google Webspam team members can access these documents.

When you’re done, your online document should look something like this:

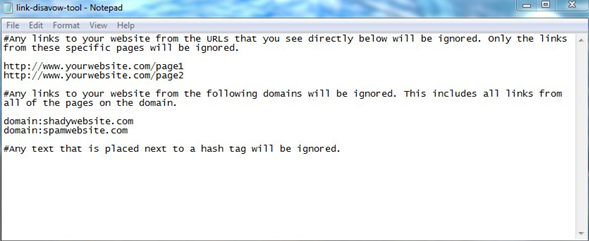

The disavow file

After you’ve finished contacting all the websites, you’re now left with the ones that haven’t replied, that asked for money to remove the link, or that have no contact details.

You should take these websites and add them to the disavow file.

You can add individual pages from a domain or the entire domain itself. Since it’s not likely that a webmaster would create a bad link from one page and a good link from another page pointing to your site, you’re safer just disavowing the entire domain.

Start a line with a hash sign (#) if you want to add comments such as:

# The owners of the following websites asked for money in order to remove my link.

# These are websites that automatically scrape content and they’ve also scraped my website with links and all.

# The webmasters of these websites never replied to my repeated emails.

URLs are added as they are and domains are added by using “domain:domain-name.com” to specify that you’re disavowing all the links that come from a specific domain.

This should be a simple .txt file (you can use Notepad). When you’re done, go to Webmaster Tools and upload it.

If you need to add more sites to an already submitted disavow file, you will need to upload a new file which will overwrite the existing one – so make sure the disavowed domains from your first list are also copied into your second list.
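Here is a small Python sketch of how the disavow file described above could be assembled: comment lines start with #, full URLs are written as they are, and bare domains get the “domain:” prefix. The function name and its parameters are hypothetical, and the example domains are made up. Entries from an earlier upload are carried over because a new upload overwrites the old file.

```python
def build_disavow_file(sections, previous_lines=()):
    """Return the text of a disavow file.

    sections: list of (comment, entries) pairs. An entry is either a full
        URL, written as-is, or a bare domain, written as "domain:<name>".
    previous_lines: lines from an earlier upload, kept at the top because
        a new upload replaces the existing file.
    """
    lines = list(previous_lines)
    for comment, entries in sections:
        lines.append(f"# {comment}")
        for entry in entries:
            is_url = entry.startswith(("http://", "https://"))
            line = entry if is_url else f"domain:{entry}"
            if line not in lines:  # skip entries already disavowed
                lines.append(line)
    return "\n".join(lines) + "\n"


text = build_disavow_file(
    [(
        "The webmasters of these websites never replied to my repeated emails.",
        ["spam.example.com", "http://blog.example.org/bad-page.html"],
    )],
    previous_lines=["domain:old-spam.example.net"],
)
print(text)
```

Save the returned text to a plain .txt file before uploading it.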

Be very careful when using the disavow file. Don’t add full domains such as WordPress or Blogger.com just because you had links from a subdomain created on one of these platforms.

Also, add a domain to the disavow file only after you have tried your very best to remove the link (and can show proof you’ve tried). Google isn’t satisfied with you simply renouncing the link juice from certain websites; it wants to see that you’ve also tried your best to clean up the internet of all of your spammy links.

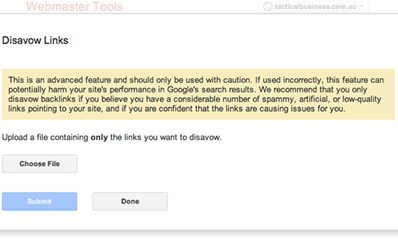

The Reconsideration Request

When you have the final status for every website that sent a bad link to you and have also submitted your disavow file, it’s time to send Google an apology letter, also known as a Reconsideration Request.

You can do this from Webmaster Tools:

There is a limit of 500 words and 2,850 characters (including spaces), so use this space wisely to explain what actions you’ve taken to try to clean up your site.
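Before pasting your draft into the form, you can check it against both limits with a few lines of Python. This is a simple sketch: it counts words by splitting on whitespace, which may differ slightly from how the form itself counts them, and the function name is my own.

```python
def check_request_length(text, max_words=500, max_chars=2850):
    """Count words and characters (spaces included) and compare them with
    the limits quoted for the Reconsideration Request form."""
    words = len(text.split())
    chars = len(text)
    return {
        "words": words,
        "chars": chars,
        "within_limits": words <= max_words and chars <= max_chars,
    }


draft = "We removed the paid links and disavowed the remaining domains."
print(check_request_length(draft))
```

If the check fails, trim the narrative and lean on the linked spreadsheets for the detail.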

Things that you should include:

- If you paid for links, then name the SEO agency you worked with to acquire links or any other similar information.

- What type of bad links you found in your profile such as sitewide links, comment spam and so on.

- What actions you’ve taken to make sure no more bad links will be created such as training employees to not buy or build links, retracting any free templates that had links in them, adding nofollow to the links in the widgets you provide.

- Link to the Google Online Spreadsheet where you’ve documented your efforts of contacting webmasters and taking down links. Make sure these documents are shared with anyone that has the link.

- Link to an online spreadsheet where you have a copy of the disavow file you’ve submitted.

- Confirmation of reading the Webmaster Guidelines, understanding them and following them from now on.

If you haven’t managed to clean up all your bad links, Google will reply and give you examples of other bad links. You will then have to go through the remaining links again using all the tools available and see what other websites there are now after you’ve cleaned up most of the other bad links.

Every month more than 400,000 manual actions are applied by Google and every month the search engine giant processes 20,000 reconsideration requests. Some of these reconsiderations are not the first ones sent out by webmasters.

Don’t get discouraged if you don’t get your penalty removed on your first try. Not many manage to do so. Just go back and repeat the process until you manage to remove all the bad links from your profile.

How to avoid a future Google penalty

Even if your site hasn’t been penalised, don’t take a chance – go through the guide and identify your bad links. You can follow every step up to, but not including, sending the Reconsideration Request: since there’s no penalty message, there is no need for it.

You can disavow the domains that are sending bad links to your site. You should try to contact these websites first and always save every form of communication you had with the webmasters.

If a penalty follows, you’ll already have proof that you’ve started to clean up your site.

For More Info about Penalised by Google? Here are 11 reasons why and how to recover

Google Penguin 3.0 is coming and here’s what to do to prepare

Update: Google Penguin 3.0 was launched on October 17th. As predicted, the company confirmed the update would help sites that have cleaned up bad link profiles in response to previous versions of Penguin. Owners of sites that haven’t recovered or have been adversely affected by Penguin 3.0 should follow the steps outlined below.

There is rarely a dull moment in the world of search engine optimisation (SEO). Haven’t you heard? A huge Google algorithm change is on the way. Scary.

Last month, Google said a new Penguin update would likely be launched before the end of the year, and now it looks like it may be here this week.

Google’s Gary Illyes, Webmaster Trends Analyst and Search Quality Engineer, said at Search Marketing Expo East that Google “may” be launching a Penguin algorithm refresh sometime this week, which he described as “a large rewrite of the algorithm”. Also, Barry Schwartz from Search Engine Land believes that this next update will be all good news and that it could make webmasters’ lives a bit easier.

Understanding the major implications of these updates is critical to search performance, so today we’ll talk you through the upcoming release of Google’s Penguin 3.0 update: what it is, how it can affect your website and rankings, and what you can do to prepare for its release.

What is Penguin 3.0 all about?

Over the past few weeks, Google Webmaster Trends Analyst John Mueller has been actively discussing:

- The imminent and long-anticipated Penguin update

- Google’s efforts to make its algorithm refreshes roll out quicker

- Helping websites to recover faster from ranking penalties

In a Google Webmaster Central hangout on September 12th, Mueller confirmed that the new Penguin update would roll out in 2014. In the video he said:

- Google is working on a “solution that generally refreshes faster” specifically talking about Penguin.

- He mentioned that “we are trying to speed things up” around Penguin.

- He also admitted that “our algorithms don’t reflect that in a reasonable time”, referring to webmasters’ efforts to clean up the issues around their sites being impacted by Penguin.

As you can probably tell from all the buzz on the web, Penguin 3.0 is expected to be a major update that will hopefully enable Google to run the algorithm more frequently. This would mean that those impacted by an update won’t have to wait too long before seeing a refresh.

In other words, those who have taken measures to clean up their backlink profiles should (in theory) be able to recover more quickly than in the past.

Here’s what Mueller said in a previous Google hangout on September 8:

“That’s something where we’re trying to kind of speed things up because we see that this is a bit of a problem when webmasters want to fix their problems, they actually go and fix these issues but our algorithms don’t reflect that in a reasonable time, so that’s something where it makes sense to try to improve the speed of our algorithms overall.”

Can a site recover from ranking penalties without an algorithm refresh?

A day after the September 8 Google+ Hangout, Mueller replied to a post in the Webmaster Central Help Forum, stating that while a Penguin refresh is required for an affected site to recover, it is possible for webmasters to improve their site rankings without a Penguin update.

With Google using over 200 factors in crawling, indexing and ranking, Mueller said that if webmasters do their best to clean up site issues and focus on having a high-quality site rather than on individual factors of individual algorithms, they may see changes even before that algorithm or its data is refreshed.

“I know it can be frustrating to not see changes after spending a lot of time to improve things. In the meantime, I’d really recommend not focusing on any specific aspect of an algorithm, and instead making sure that your site is (or becomes) the absolute best of its kind by far,” Mueller recommended.

In the thread “Has Google ever definitively stated that it is possible to recover from Penguin?”, Mueller replied: “Yes, assuming the issues are resolved in the meantime, with an update of our algorithm or its data, it will no longer be affecting your site.”

That’s also all the more reason for webmasters to welcome this next update with open arms.

The history of Penguin

Google launched the Penguin update in April 2012 and Penguin 3.0 would be the 6th refresh. The purpose of Penguin is to uncover spammy backlink profiles and punish sites that are violating Google’s quality guidelines by lowering their rankings in its search engine results.

Overall, Google is trying to catch and penalise websites that try to rank higher in its search results through:

- Keyword stuffing, meaning loading a webpage with the same words or phrases so much that it sounds unnatural

- Low-quality backlinks, often generated using automated software

- A large number of links optimised using the exact same anchor text

- Excessive link exchange

- Forum comments with links added in the signature

- And various other link schemes.

Penguin focuses on the link-related aspects of this list.
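To make the anchor-text point concrete, here is a minimal Python sketch that measures how concentrated a backlink profile is on a single anchor text. The sample anchors, the function names and the 30% threshold are all assumptions for illustration; Google has not published any such threshold, and in practice the anchors would come from whatever backlink export you use.

```python
from collections import Counter


def anchor_text_shares(anchors):
    """Return (anchor, share) pairs, most frequent anchor first."""
    # Normalise case and whitespace so "Cheap Loans " and "cheap loans" match.
    normalized = [a.strip().lower() for a in anchors if a.strip()]
    counts = Counter(normalized)
    total = sum(counts.values())
    return [(text, count / total) for text, count in counts.most_common()]


def flag_overused_anchors(anchors, threshold=0.3):
    """List anchors whose share of all links exceeds the (assumed) threshold."""
    return [text for text, share in anchor_text_shares(anchors) if share > threshold]


# Hypothetical backlink anchors for illustration only.
sample_anchors = [
    "cheap payday loans", "cheap payday loans", "cheap payday loans",
    "Example Ltd", "https://www.example.com", "click here",
]

overused = flag_overused_anchors(sample_anchors)
print(overused)
```

A natural profile tends to be dominated by brand names and bare URLs, so an exact-match commercial phrase at the top of this list is the kind of pattern worth a closer look.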

Here’s a timeline of the previous Penguin updates:

- Penguin 1.0 – April 24, 2012 (affected 3.1% of searches)

- Penguin 1.2 – May 26, 2012 (affected 0.1% of searches)

- Penguin 1.3 – October 5, 2012 (affected 0.3% of searches)

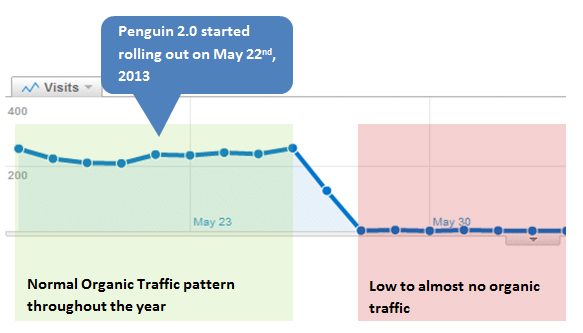

- Penguin 2.0 – May 22, 2013 (affected 2.3% of searches)

- Penguin 2.1 – October 4, 2013 (affected 1% of searches)

- Penguin 3 – October 2014?

Google’s Gary Illyes, Webmaster Trends Analyst and Search Quality Engineer, said that if you disavow bad links now or as of about two weeks ago, it may be too late for this next Penguin refresh. However, he added that since the Penguin refreshes will be more frequent you should never stop working on removing bad links.

So, here are a few things you can do to prevent your site from getting penalised by a future Penguin update:

- Do a thorough link clean-up and remove all unnatural links pointing to your site. When it comes to Penguin, bad links are usually the cause of the penalty so make sure you remove as many bad links as you possibly can and then disavow the rest. Here’s a good article with steps to find and remove unnatural links.

- Make sure your disavow file is correct. Find out more about disavowing backlinks.

- Assess the remaining “good” links. Do you still have enough valuable links for your site to rank well or do you need to build some more links? Don’t shy away from link building campaigns, just make sure that this time you build links on high-quality, relevant, authority sites.

- Other common sense actions to ensure that you have a healthy website. Read this excellent article from Moz with some great advice on how to ensure your site is healthy and how to handle life after an algorithm update, whether it’s Penguin, Panda or any other Google update.

- Don’t treat an algorithmic penalty as a manual penalty. Keep in mind that there may be other factors that might be preventing your site from ranking well, which may not be linked to an algorithmic penalty like Penguin or Panda. Check out this useful article with the complete list of reasons that may be causing your traffic to drop.
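On the disavow file point above: the file Google accepts is plain text with one entry per line, either a full URL or a `domain:` entry, and lines starting with `#` are comments. The sketch below is a rough sanity check for that format, not an official validator; the function name and the sample entries are made up for illustration, and the checks are simple heuristics.

```python
from urllib.parse import urlparse


def check_disavow_lines(lines):
    """Return (line_number, text, problem) for entries that look malformed."""
    problems = []
    for number, raw in enumerate(lines, start=1):
        line = raw.strip()
        # Blank lines and comments are allowed.
        if not line or line.startswith("#"):
            continue
        if line.startswith("domain:"):
            domain = line[len("domain:"):]
            # A domain entry should be a bare host name, not a URL or path.
            if not domain or "/" in domain or " " in domain or "." not in domain:
                problems.append((number, line, "invalid domain entry"))
            continue
        parsed = urlparse(line)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append((number, line, "not a full http(s) URL or domain: entry"))
    return problems


# Hypothetical file contents for illustration only.
sample = [
    "# spammy directory, owner did not reply",
    "domain:spam-directory.example",
    "http://bad-links.example/page.html",
    "domain:http://wrong.example/",
    "www.missing-scheme.example/page",
]

issues = check_disavow_lines(sample)
for number, text, problem in issues:
    print(f"line {number}: {text} ({problem})")
```

Running a check like this before uploading catches the most common slips, such as putting a full URL after `domain:` or leaving the scheme off a URL.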

Since this is an algorithmic penalty, Google will never notify you if your site has been hit by Penguin. That means you will need to watch your site’s traffic and look out for other warning signs, including:

- You’re no longer ranking well for the one keyword you should always rank well for: your brand name. When this happens, it’s a clear sign you’ve been hit.

- Your site is dropping from page one to page two or three in Google’s search results although you’ve made no changes whatsoever.

- Your site has been removed from Google’s cached search results overnight.

- You get no results when you run a site search (eg: site:yourdomain.co.uk keyword).

- Google Analytics (or any other analytics tool you’re using) is showing a significant drop in organic traffic a few days after a big Google update.

So, what we recommend is that you monitor your organic traffic closely for at least two weeks after a Penguin update. If your traffic dramatically drops during this time, it’s likely due to the update. Also make sure you keep an eye on Google Webmaster Tools notifications to see if you have any manual penalties applied to your website.
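The two-week monitoring advice can be turned into a simple before-and-after comparison. The following Python sketch assumes you have exported daily organic sessions from your analytics tool into a dictionary keyed by date; the update date, the session numbers and the function name are hypothetical.

```python
from datetime import date, timedelta
from statistics import mean


def traffic_change(daily_sessions, update_day, window_days=14):
    """Relative change in average daily sessions after an update.

    Compares the `window_days` before `update_day` with the
    `window_days` starting on it. Returns None if data is missing.
    """
    window = timedelta(days=window_days)
    before = [v for d, v in daily_sessions.items() if update_day - window <= d < update_day]
    after = [v for d, v in daily_sessions.items() if update_day <= d < update_day + window]
    if not before or not after:
        return None
    baseline = mean(before)
    if baseline == 0:
        return None
    return (mean(after) - baseline) / baseline


# Hypothetical data: 1,000 organic sessions a day before the update, 600 after.
update_day = date(2014, 10, 20)  # assumed date, for illustration only
sample = {}
for offset in range(-14, 14):
    day = update_day + timedelta(days=offset)
    sample[day] = 1000 if offset < 0 else 600

change = traffic_change(sample, update_day)
print(f"Organic traffic change: {change:.0%}")
```

A sharp negative number that lines up with a known update date is a hint, not proof: seasonality, tracking changes or a site redesign can produce the same pattern, so rule those out as well.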

If you do discover your site has been penalised, stop what you’re doing and go fix whatever it is that has caused your site to get hit.

What can you do to recover?

If you’ve been penalised by a previous Google update, we’ve got you covered with an in-depth guide on why your site may have been hit and what you can do to recover. This guide walks you through 11 reasons that might have caused your site to get penalised as well as specific steps to follow to help you recover after you’ve been hit.

Penalised by Google?

Check out our comprehensive guide on reasons why you got penalised and ways to recover. Make sure you bookmark it and use it in the event your site gets hit by a future Google penalty.

Meanwhile, keep in mind that there are over 200 factors that influence rankings for a site. It’s not enough to focus on one algorithm and fix those specific issues; look at the bigger picture instead.

Search engines reward sites that provide searchers with the most informative, interesting and relevant content for their search queries and with the best user experience.

So while it’s ok to prioritise site optimisation and quickly fix certain issues that may arise, it’s even more important to put your users first and provide them with the best information and website experience they can ask for.

Tuesday, September 30, 2014

Panda 4.1-Google’s 27th Panda Update-Is Rolling Out

Google has announced that the latest version of its Panda Update — a filter designed to penalize “thin” or poor content from ranking well — has been released.

Google said in a post on Google+ that a “slow rollout” began earlier this week and will continue into next week, before being complete. Google said that depending on location, about 3% to 5% of search queries will be affected.

Anything different about this latest release? Google says it’s supposed to be more precise and will allow more high-quality small and medium-sized sites to rank better. From the post:

“Based on user (and webmaster!) feedback, we’ve been able to discover a few more signals to help Panda identify low-quality content more precisely. This results in a greater diversity of high-quality small- and medium-sized sites ranking higher, which is nice.”

New Chance For Some; New Penalty For Others

The rollout means anyone who was penalized by Panda in the last update has a chance to emerge, if they made the right changes. So if you were hit by Panda and made alterations to your site, you’ll know by the end of next week whether those were good enough: if they were, you should see an increase in traffic.

The rollout also means that new sites not previously hit by Panda might get impacted. If you’ve seen a sudden traffic drop from Google this week, or note one in the coming days, then this latest Panda Update is likely to blame.

About That Number

Why are we calling it Panda 4.1? Well, Google itself called the last one Panda 4.0 and deemed it a major update. This isn’t as big of a change, so we’re going with Panda 4.1.

We actually prefer to number these updates in the order that they’ve happened, because trying to determine if something is a “major” or “minor” Panda Update is imprecise and leads to numbering absurdities like having a Panda 3.92 Update.

But since Google called the last one Panda 4.0, we went with that name, and we’ll continue on with the old-fashioned numbering system unless it gets absurd again.

For the record, here’s the list of confirmed Panda Updates, with some of the major changes called out with their AKA (also known as) names:

- Panda Update 1, AKA Panda 1.0, Feb. 24, 2011 (11.8% of queries; announced; English in US only)

- Panda Update 2, AKA Panda 2.0, April 11, 2011 (2% of queries; announced; rolled out in English internationally)

- Panda Update 3, May 10, 2011 (no change given; confirmed, not announced)

- Panda Update 4, June 16, 2011 (no change given; confirmed, not announced)

- Panda Update 5, July 23, 2011 (no change given; confirmed, not announced)

- Panda Update 6, Aug. 12, 2011 (6-9% of queries in many non-English languages; announced)

- Panda Update 7, Sept. 28, 2011 (no change given; confirmed, not announced)

- Panda Update 8, AKA Panda 3.0, Oct. 19, 2011 (about 2% of queries; belatedly confirmed)

- Panda Update 9, Nov. 18, 2011 (less than 1% of queries; announced)

- Panda Update 10, Jan. 18, 2012 (no change given; confirmed, not announced)

- Panda Update 11, Feb. 27, 2012 (no change given; announced)

- Panda Update 12, March 23, 2012 (about 1.6% of queries impacted; announced)

- Panda Update 13, April 19, 2012 (no change given; belatedly revealed)

- Panda Update 14, April 27, 2012 (no change given; confirmed; first update within days of another)

- Panda Update 15, June 9, 2012 (1% of queries; belatedly announced)

- Panda Update 16, June 25, 2012 (about 1% of queries; announced)

- Panda Update 17, July 24, 2012 (about 1% of queries; announced)

- Panda Update 18, Aug. 20, 2012 (about 1% of queries; belatedly announced)

- Panda Update 19, Sept. 18, 2012 (less than 0.7% of queries; announced)

- Panda Update 20, Sept. 27, 2012 (2.4% of English queries impacted; belatedly announced)

- Panda Update 21, Nov. 5, 2012 (1.1% of English-language queries in US; 0.4% worldwide; confirmed, not announced)

- Panda Update 22, Nov. 21, 2012 (0.8% of English queries were affected; confirmed, not announced)

- Panda Update 23, Dec. 21, 2012 (1.3% of English queries were affected; confirmed, announced)

- Panda Update 24, Jan. 22, 2013 (1.2% of English queries were affected; confirmed, announced)

- Panda Update 25, March 15, 2013 (confirmed as coming; not confirmed as having happened)

- Panda Update 26, AKA Panda 4.0, May 20, 2014 (7.5% of English queries were affected; confirmed, announced)

- Panda Update 27, AKA Panda 4.1, Sept. 25, 2014 (3-5% of queries were affected; confirmed, announced)

The latest update comes four months after the last, which suggests that this might be a new quarterly cycle that we’re on. Panda had been updated on a roughly monthly basis during 2012. In 2013, most of the year saw no update at all.
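The cadence claims are easy to check against the dates in the list above. This short Python sketch computes the gaps between consecutive confirmed updates; the dates are copied from the list, while the helper name is just an illustration.

```python
from datetime import date


def gaps_in_days(dates):
    """Days between each pair of consecutive dates, in chronological order."""
    ordered = sorted(dates)
    return [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]


# Confirmed 2012 updates (Panda Updates 10 to 23) from the list above.
updates_2012 = [
    date(2012, 1, 18), date(2012, 2, 27), date(2012, 3, 23), date(2012, 4, 19),
    date(2012, 4, 27), date(2012, 6, 9), date(2012, 6, 25), date(2012, 7, 24),
    date(2012, 8, 20), date(2012, 9, 18), date(2012, 9, 27), date(2012, 11, 5),
    date(2012, 11, 21), date(2012, 12, 21),
]

gaps_2012 = gaps_in_days(updates_2012)
average_gap_2012 = sum(gaps_2012) / len(gaps_2012)

# Gap between Panda 4.0 (May 20, 2014) and Panda 4.1 (Sept. 25, 2014).
latest_gap = gaps_in_days([date(2014, 5, 20), date(2014, 9, 25)])[0]

print(f"Average gap in 2012: {average_gap_2012:.1f} days")
print(f"Panda 4.0 to 4.1: {latest_gap} days")
```

The 2012 average works out to 26 days, which matches the "roughly monthly" description, and the 128 days between Panda 4.0 and 4.1 is a little over four months.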

Of course, there could have been unannounced releases of Panda that have happened. The list above is only for those that have been confirmed by Google.

Tuesday, September 16, 2014

Beginner's Guide To Web Data Analysis: Ten Steps To Success

Secret To Winning Web Analytics: 10 Starting Points For A Fabulous Start!

I want to share where in your web analytics data you can find valuable starting points, even without any context about the site / business / priorities. Reports to look at, KPIs to evaluate, inferences to make.

Here's what we are going to cover:

Step #1: Visit the website. Note objectives, customer experience, suckiness.

Step #2: How good is the acquisition strategy? Traffic Sources Report.

Step #3: How strongly do Visitors orbit the website? Visitor Loyalty & Recency.

Step #4: What can I find that is broken and quickly fixable? Top Landing Pages.

Step #5: What content makes us most money? $Index Value Metric.

Step #6: How Sophisticated Is Their Search Strategy? Keyword Tag Clouds.

Step #7: Are they making money or making noise? Goals & Goal Values.

Step #8: Can the Marketing Budget be optimized? Campaign Conversions/Outcomes.

Step #9: Are we helping the already convinced buyers? Funnel Visualization.

Step #10: What are the unknown unknowns I am blind to? Analytics Intelligence.

The first time you go through the steps outlined in this guide it might take you more than 120 minutes. But I promise you that with time and experience you'll get better.

Thursday, July 17, 2014

The World’s Quickest (Authentic) SEO & Marketing Audit In 12+1 Steps

I am, according to those who know me, a very structured person. In order to function, I have to live by spreadsheets, task lists and processes. Without them, I’m lost. It’s just life. Isn’t everyone like that?

But despite my obsession with structure and process, I don’t like giving clients a one-size-fits-all web marketing campaign. It just doesn’t make sense. Every website is different and has different needs; therefore, the online marketing plan will have to be different as well.

The problem is, you really can’t know what any particular site’s needs are until after you’ve gone through and performed a thorough site audit, and that can take anywhere from 5 to 20 hours depending on the site.

We can’t really give away several hundred dollars’ worth of work each time we get a request for a proposal, but we won’t do cookie-cutter, either. Quite the conundrum!

I’m sure we are not all that different from many other SEOs that will perform a quick assessment of a site in order to provide some specific feedback to the prospect. But we always want to make sure our assessments are meaningful. We don’t want to just say, “Hey, look at us, we know something!” We want to put together a proposal that addresses many of their marketing needs, so they understand that we truly have a grasp of what needs to be done.

Yeah, we could run the site through a couple of tools that spit out some basic SEO information, but they can (and likely do) get that from anyone else. Instead, why not put a bit more effort into your initial audits, without breaking the bank on time?

12+1 Website Audit Steps

Below are 12 key SEO/marketing areas to assess — plus a quick PPC review — when drafting a proposal for prospective clients (or for any reason, really). When reviewing each of these areas, you should be able to uncover some definite actionable tasks and get a broader understanding of the site’s overall marketing needs.

1. Keyword Focus

One of the first things to look at is the overall keyword optimization of the site. Some sites have done a decent job writing good title tags and meta descriptions — others, not so much. Look through several pages of the site, glancing at tags, headings and content to see if keywords are a factor on those pages or if the site is pretty much a blank slate requiring some hardcore keyword optimization.
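For a quick first pass at keyword focus, a few lines of Python can pull the title tag and meta description out of a page and check whether a target phrase appears in them. This is only a sketch using the standard library: the class and function names, the sample page and the keyword are all invented for illustration, and a phrase match says nothing about whether the copy reads well.

```python
from html.parser import HTMLParser


class HeadTagParser(HTMLParser):
    """Collects the <title> text and the meta description of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            attributes = dict(attrs)
            if (attributes.get("name") or "").lower() == "description":
                self.meta_description = attributes.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def keyword_report(html, keyword):
    """Report whether `keyword` appears in the title and meta description."""
    parser = HeadTagParser()
    parser.feed(html)
    needle = keyword.lower()
    description = parser.meta_description or ""
    return {
        "title": parser.title.strip(),
        "keyword_in_title": needle in parser.title.lower(),
        "has_meta_description": bool(description),
        "keyword_in_meta_description": needle in description.lower(),
    }


# Hypothetical page for illustration only.
sample_page = """<html><head>
<title>Handmade Oak Tables | Example Furniture</title>
<meta name="description" content="Browse handmade oak tables built to order.">
</head><body><h1>Oak tables</h1></body></html>"""

report = keyword_report(sample_page, "oak tables")
print(report)
```

Run over a handful of key pages, a report like this quickly shows whether the site is a blank slate or already has some keyword targeting in place.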

2. Architectural Issues

Next, look at global architectural issues. Things you can look at quickly are broken links (run a tool while you’re doing other assessments), proper heading tag usage, site and page spiderability, duplicate content issues, etc. None of these take too much time and can be assessed pretty quickly. Some of the solutions for these are quick and some aren’t; and undoubtedly, once you start digging deeper you’ll find a lot more issues later.

3. Navigation Issues

Does the navigation make sense for the site? Look to see if it’s too convoluted or maybe even too simplistic. You want visitors to easily find what they are looking for without being overloaded with choices and options. Determine if the navigation needs some tweaking or all-out revamping.

4. Category Page Optimization

Product category pages can have all kinds of problems, from poorly implemented product pagination to a lack of unique content. Look at each of these pages from the perspective of value and determine if a visitor or search engine will find any unique value on the page. You might need to add some content, product filtering options, or better product organization to make the page better for both visitors and search engines alike.

5. Product Page Optimization

Product pages can be tricky. Some searchers might look for a product name, others for a product number or a specific description of what the product can do. Make sure your product page content addresses each of these types of search. You also want to make sure the content of the product pages is largely unique, not just on your site, but across the web as well. If not, there may be a lot of work ahead of you.

6. Local Optimization: Off-Site

Sites that are local, rather than national, have an entirely different set of criteria to analyze. For local sites, you need to see if they are doing a good job with their citations, maps, listings and other off-page signals. You don’t have to do an exhaustive check; a quick look at some of the main sites that assist with local signals should do.

7. Local Optimization: On-Site

Aside from off-page local signals, you should also look at the on-page optimization of local keywords. This often goes one of two ways: either there is very little local optimization on the page or far too much, with tons of local references crammed into titles, footers and other areas of the site. Assess the changes you’ll need to make, either way, to get the site where it needs to be.

8. Inbound Links

No assessment would be complete without at least looking at the status of the site’s inbound links, though you’ll have to dig a bit to get some information on the quality of the links coming in. It helps to do the same for a competitor or two so you have some basis of comparison. With that, you’re better able to see what needs to be done to compete sufficiently.

9. Internal Linking

Internal linking can be an issue, outside of navigation. Is the site taking advantage of opportunities to link to its own pages within the content of other pages? A page should rarely stand alone; instead, it should be a springboard that drives traffic to the next page or pages based on the mutual relevance of the content.
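One rough way to see how much internal linking a page does is to count its links and split them into internal and external. The Python sketch below uses only the standard library; the page URL, the HTML snippet and the names are hypothetical, and it does not tell navigation links apart from in-content links, so in practice you would feed it just the main content area.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def split_links(html, page_url):
    """Return (internal, external) lists of absolute http(s) links."""
    collector = LinkCollector()
    collector.feed(html)
    site_host = urlparse(page_url).netloc.lower()
    internal, external = [], []
    for href in collector.hrefs:
        absolute = urljoin(page_url, href)  # resolve relative links
        parsed = urlparse(absolute)
        if parsed.scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, javascript: and similar
        if parsed.netloc.lower() == site_host:
            internal.append(absolute)
        else:
            external.append(absolute)
    return internal, external


# Hypothetical page content for illustration only.
page_url = "https://www.example.com/guides/oak-care"
content = """
<p>See our <a href="/tables/oak">oak tables</a> or read this
<a href="https://other.example/review">independent review</a>.
Questions? <a href="mailto:hi@example.com">Email us</a>.</p>
"""

internal, external = split_links(content, page_url)
print(len(internal), "internal,", len(external), "external")
```

Pages with plenty of body copy and no internal links at all are usually the quickest wins.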

10. Content Issues

This is a bit more of an in-depth look at the site’s content overall. It’s not about the amount of content, but the quality of the content throughout the site. Assessing the content’s value will help you identify problem pages and determine whether there is a need to establish an overall content strategy.

11. Social Presence

Social presence matters, so jump in and see where the brand stands in the social sphere. Do they have social profiles established? Is there active engagement on those profiles? Is social media being used as an educational tool or as a promotional tool? These things matter a great deal, especially when determining the course of action that needs to be taken.

12. Conversion Optimization Issues

Web marketing is not all about traffic. If you’re getting traffic but not conversions, then it doesn’t matter how good the “SEO” is. Look through the site for obvious conversion and usability issues that need to be fixed or improved. Just about every site can use conversion optimization; it’s just more obvious (and urgent) for some sites than others. This assessment helps you determine if your time is better spent here or somewhere else.

Bonus: PPC Issues

The items above primarily deal with website and optimization issues. But if a PPC campaign is running, take a look at that and make sure it was set up and is being executed optimally. Many people don’t believe PPC can be profitable. Most of the time it’s not, but only because of poor management. If there is room for improvement with PPC, you’ll want to know.

It’s Just A Starting Point

Of course, you can spend hours assessing each of these areas, but that’s not the point. A quick 5-10 minute look into each of these areas can give you a wealth of information that you can use to improve the site.

This is the starting point, but as you dive into each of these areas, more opportunities will arise. The point is, you have to start somewhere. This is the most authentic quick SEO and marketing review you can give, without getting lost in the details or in an endless pit of time.

Thursday, May 22, 2014

Google Launches Panda 4.0

It's official: Panda 4.0 has hit the Google search results.

The Panda algorithm, which was designed to help boost great-quality content sites while pushing down thin or low-quality content sites in the search results, has always targeted scraper sites and low-quality content sites in order to provide searchers with the best search results possible.

Panda 4.0, which Google and Matt Cutts confirmed began rolling out yesterday, has definitely caused some waves in the search results. However, the fact that Panda 4.0 is also hitting at the same time as a refresh of an algorithm targeting spammy queries like payday loans makes it a little bit more difficult for webmasters to sort through the damage.

With the payday loans refresh impacting extremely spammy queries and Panda targeting low-quality content, some webmasters could potentially get hit with both of these or by just one or the other.

While people usually complain about losing rankings, Alan Bleiweiss from AlanBleiweiss.com showed via Twitter that Panda can also bring about some serious improvement to a site's traffic.

Prior to Cutts confirming via Twitter that Panda 4.0 hit, there had been plenty of speculation about a change with either Panda or Penguin, although many found it hard to pin down exactly what the change was.

"It was interesting to see the chatter amongst SEOs over the last few days," says Marie Haynes of HIS Web Marketing. "We all knew that something was going on, but there was significant disagreement on exactly what Google was doing. Most of the black hats were saying that it was related to Penguin because they were noticing huge drops in their sites with the spammiest link profiles."

This would have been the changes to the payday loans update, where the

goal is to target the spammiest search queries Google sees. There was

also plenty of debate on forums and Twitter about whether the shake-up

we saw was Panda-related or Penguin-related. And with the payday loans

update thrown in there for good measure, it was definitely confusing.

"I was really hoping that this wasn't a Penguin refresh because

the majority of the sites that I had done link cleanup on were not

seeing any improvement and if Penguin refreshed and none of my clients

were seeing ranking increases, then that would not be good," Haynes

says. "I was also puzzled because one site that I monitor that has been

adversely affected by several Panda updates saw a vast improvement

today. It does not have a bad link profile and would not have been

affected by a Penguin update."

"When I heard the announcements that Google had released two algorithms

over the last couple of days – payday loans and also a big Panda update,

everything made sense. And still, so many of us are waiting for that

big Penguin refresh. It wouldn't surprise me if that happens in the next

couple of days," she says.

Google has said previously that they try not to throw multiple updates

at once or within a very short period of time, so it is interesting that

they pushed out two completely unrelated updates – although updates

that could both impact some of the same sites – within a couple of days.

Releasing two back-to-back updates makes it a lot harder for webmasters

to analyze the changes and what specifically was targeted in these

updates, and could affect Google's ability to evaluate how well (or not)

the two updates worked. It also means webmasters might have a challenge

knowing what changes need to be made in order to recover search

rankings.

However, Haynes speculated that some of the Panda change might have been

to reverse some of the damage done to sites that had great content yet

were impacted for other on-page reasons.

"I think it's too early to say what this new iteration of Panda is

affecting, but I can tell you that in the case of the site that I saw

big improvements on, no cleanup work had been done at all," Haynes says.

"It was always in 'Panda flux.'"

She says some Panda refreshes would hit the site hard, and then occasionally it would show a slight increase with a refresh.

"This is by far the biggest increase, though," Haynes says. "It really

is a good-quality site and I believe that what caused it to be affected

by Panda in the first place was a site layout that caused crawlers to

get confused. Underneath that confusing layout is a site full of really

good content. It may be that Google found ways to see past structural

issues and recognize the good content as the structural problems would

not affect users, just crawlers. But really, that's a guess."

Panda first blazed onto the scene in February 2011,

and was believed to be Google's response to criticism of how well

content farms were ranking. Google later announced that they internally

called it the big Panda update, after Navneet Panda, the Google engineer

who created it.

While there have been many smaller Panda updates and a couple of larger ones, each rolled out monthly over a period of 10 days, Google had announced several months ago that Panda would be integrated into the algorithm updates and that the "softer" Panda

would be less noticeable. However, that clearly isn't the case with

Panda 4.0, which has had a widespread impact, both positively and

negatively.

"Digging into the May 19th data (and before Google confirmed anything), I noticed that a few keywords seemed to show losses for eBay, and the main eBay sub-domain fell completely out of the 'Big 10' (our metric of the ten domains with the most 'real estate' in the top 10)," writes Dr. Pete Meyers of Moz. In fact, Meyers notes that eBay's drop was so significant that it fell from 1 percent of their queries to 0.28 percent. It won't be surprising to hear of more sites that have seen a drop due to Panda 4.0, especially sites that have been notorious for having low-quality content.
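To put the eBay figures in perspective, here is a quick back-of-the-envelope calculation. It is only a sketch: it assumes the two percentages quoted above are shares of the same tracked query set, and the function name relative_change is just an illustrative helper, not part of any tool mentioned here.

```python
def relative_change(before: float, after: float) -> float:
    """Return the change from `before` to `after` as a fraction of `before`."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before


# Share of tracked queries (in percent) quoted in the post.
share_before = 1.00
share_after = 0.28

change = relative_change(share_before, share_after)
print(f"Relative change: {change:.0%}")  # prints: Relative change: -72%
```

In other words, under that assumption, going from 1 percent to 0.28 percent means losing roughly 72 percent of the visibility eBay had in that data set.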

We will definitely see more come out in the next few days as webmasters and SEO professionals have a chance to evaluate the changes and what might have caused sites (their own or competitors') to rise or fall in Google's search results. And since we're still awaiting a Google Penguin update (Penguin 2.0 launched a year ago this week), I wouldn't be surprised if we see one of those updates coming soon, especially in light of Google releasing both the payday loans update and Panda 4.0 so close together.

